Image taken from: https://arxiv.org/abs/2310.16410

Concepts Discovered by AlphaZero

Looking at a paper from DeepMind about concept discovery and transfer

The most fascinating part for me about neural network engines like AlphaZero and Leela Chess Zero is that they learn chess from scratch. This means that they don't have human biases and might "see" chess very differently than we do.

So when I saw that researchers from Google's DeepMind published a paper last year titled "Bridging the Human–AI Knowledge Gap: Concept Discovery and Transfer in AlphaZero", I had to take a closer look at it.

As the title suggests, the authors first extract chess concepts from AlphaZero. Then they search for positions where certain concepts are present and try to teach them to grandmasters. They worked with grandmasters Vladimir Kramnik, Dommaraju Gukesh, Hou Yifan, and Maxime Vachier-Lagrave.

Concept Discovery

The first step is to figure out when a concept is present in a position. To do this, the authors used two datasets of positions for each concept: one with positions where the concept is present and one with positions where it is absent. The concepts they used were the presence of certain piece types, the concepts built into Stockfish (like open files and space), concepts from the Strategic Test Suite, and different openings.

Using these datasets, they then extracted vectors that represent the concept in the neural network of AlphaZero. After they had a representation for existing human concepts, the authors extracted concepts from AlphaZero which were new.
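To make the idea of a concept vector more concrete, here is a minimal Python sketch. It is not the method from the paper: I'm using a plain difference of mean activations as a simple stand-in, and the activations are made-up toy numbers, not real AlphaZero data. The function names `concept_vector` and `concept_score` are my own.

```python
def concept_vector(present, absent):
    """Difference of mean activations between the two datasets.

    This is a simplified stand-in, not the paper's extraction method.
    Each row is the (hypothetical) network activation for one position.
    """
    dims = len(present[0])
    mean_present = [sum(row[i] for row in present) / len(present) for i in range(dims)]
    mean_absent = [sum(row[i] for row in absent) / len(absent) for i in range(dims)]
    return [p - a for p, a in zip(mean_present, mean_absent)]


def concept_score(activation, vector):
    """Dot product: a higher score means the activation points more along the concept."""
    return sum(a * v for a, v in zip(activation, vector))


# Toy, made-up activations with three dimensions each.
present = [[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]]  # positions where the concept is present
absent = [[0.0, 0.0, 2.0], [0.0, 2.0, 2.0]]   # positions where the concept is absent

vec = concept_vector(present, absent)
print(vec)
print(concept_score(present[0], vec), concept_score(absent[1], vec))
```

In this toy example, the position from the "present" dataset scores higher than the one from the "absent" dataset, which is the property one would want from a concept vector.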

To do this, they looked at games played by AlphaZero and chose positions where an older version of AlphaZero (rated 75 Elo points lower) chose a different move than the current version. This was done in order to ensure that the concepts are learned at a late stage in training and therefore more complex.
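The selection step can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the position labels, the moves, and the two lookup functions standing in for the current and the older network are all hypothetical.

```python
def disagreement_positions(positions, current_move, older_move):
    """Keep only the positions where the two versions choose different moves."""
    return [pos for pos in positions if current_move(pos) != older_move(pos)]


# Hypothetical move choices of the two versions for three placeholder positions.
current_choices = {"pos_a": "Nf3", "pos_b": "Bg5", "pos_c": "O-O"}
older_choices = {"pos_a": "Nf3", "pos_b": "Be3", "pos_c": "O-O"}

selected = disagreement_positions(
    ["pos_a", "pos_b", "pos_c"],
    current_move=current_choices.get,
    older_move=older_choices.get,
)
print(selected)
```

Only the position where the two versions disagree survives the filter.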

To understand the new concepts from AlphaZero's games, they built a graph to see how the concepts relate to human-understandable concepts. As an example, take a look at the following two positions in which the same concept occurs:

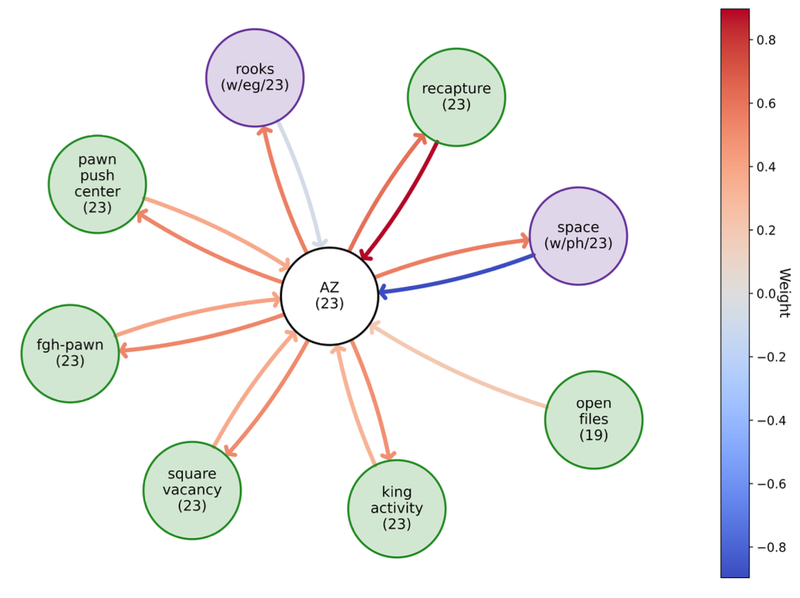

The authors show a part of the concept graph to illustrate how this concept is related to human-understandable concepts:

The green concepts are from the Strategic Test Suite and the purple concepts are from Stockfish. The AlphaZero concept from the positions above has a strong relationship with the recapture concept and the space advantage concept (for White).
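One simple way to think about how strongly two concepts are related is the cosine similarity between their vectors. To be clear, this is my own illustration and not necessarily how the authors built their graph. The vectors below are made-up toy values and the variable names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1 = same direction, 0 = unrelated)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up toy vectors, not taken from the paper.
new_concept = [1.0, 1.0]
space_advantage = [1.0, 0.0]
unrelated_concept = [1.0, -1.0]

print(cosine_similarity(new_concept, space_advantage))
print(cosine_similarity(new_concept, unrelated_concept))
```

A value near 1 would correspond to a strong edge in such a graph, while a value near 0 would mean the two concepts have little to do with each other.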

Finally, they filtered the concepts to only include ones that were deemed to be teachable and novel.

Teaching the Concepts

To see if the concepts are teachable to the grandmasters, the authors first sent the grandmasters positions where they were asked for their thoughts and had to give the move they would play. Then the GMs got the solutions from AlphaZero, which contained the mainline and a few variations that were relevant. Finally, the players got more positions with similar concepts and their performance was compared to the first set of positions. In total, each player saw 36 to 48 chess puzzles.

Each player performed better on the second set of positions, which might suggest that they learned the concepts from AlphaZero.

However, I'm unsure how much actual learning of the concepts occurred. Take the following example provided in the paper (which I already showed above):

Here, 9.Be3 would be a natural move, but AlphaZero wanted to play 9.Bg5 to induce the weakening move 9...h6. After 9.Be3 Nxf3+ 10.Qxf3 Nh5 followed by 11...f5, Black has a good position, but the inclusion of 9...h6 makes the f5-advance less strong. Another justification for the inclusion of 9.Bg5 is the following line:

9.Bg5 h6 10.Be3 O-O 11.Nxd4 exd4 12.Qxd4 Ng4 13.hxg4!!

Compare this to the same variation without the inclusion of Bg5 and h6:

There is only a small difference in the position, but White has the advantage with the pawn on h6, whereas Black is much better with the pawn on h7.

I think that humans often know a concept and spot it in a position, but might not play the move because they miss a critical move somewhere in the variations. Also, weighing up different ideas is often more difficult than finding the ideas in the first place. So the reason why the grandmasters missed a certain move might have more to do with their lower calculation ability compared to AlphaZero than with their knowledge of the concepts.

Conclusion

The paper was very fascinating to me. The concept discovery was especially interesting and, in my mind, opens up many possibilities. It would be amazing if engines could identify the various concepts in a given position. This would help with classifying positions and make it easier to find the strengths and weaknesses of a player. Moreover, players could also work on their weaknesses more easily, since they could quickly find positions with the concepts present and study them.

The authors also included some more examples in the paper; some of the positions and variations are simply amazing. Let me know what you think about the paper.
