@NDPatzer (Ben Balas)

Science of Chess - How many kinds of chess ability are there?

A look at using some basic data science to see if Bullet Chess is really the same thing as playing slower.

As a middle-school player back in the '90s, the only time control I knew about was Classical. I'm pretty sure we played G30/D5 (30 minutes per player, with a 5-second delay on each move before your clock starts running) at most of our club tournaments and the only Blitz games I played were between rounds, with more emphasis on fun and flailing around than actually managing the clock. My reintroduction to the game via Lichess thus opened up the world of Bullet, Blitz, Rapid and Daily chess and for the first time I had to think about how, or more specifically how fast, I wanted to play. In OTB play as a middle-schooler, I loved using my schmancy chess clock at tournaments even though we rarely ran into time trouble. What would it be like to play in these formats where time trouble practically defines the whole game?

Michael Hofmann, Kitzingen., CC BY-SA 3.0 <http://creativecommons.org/licenses/by-sa/3.0/>, via Wikimedia Commons

My first games online were 30-minute Rapid games because that's how I remembered playing chess. I kept it up for a while, setting aside time in the evening to sit down and focus on one game for an hour or so. Before long though, the siren song of the faster time controls caught up with me and I made my first forays into Blitz and Bullet. As I'm sure many others have experienced, my first Bullet games felt like total madness. I couldn't fathom making decisions that quickly and I was stunned into inaction by players who could just keep moving. Blitz was marginally better, but still not what you'd call good by any stretch of the imagination. I backed off of the fast time controls again and went back to my 30-minute games, secure in the advice that I was seeing everywhere that longer games were better for learning. Surely if I wanted to get better at the game I should stick with these longer sessions and ignore this unbelievably stressful 2+1 or 3+2 stuff.

Too many options? Maybe. Just maybe.

But then I got to thinking about what I was trying to learn and how best to do it. In particular, I was trying to build up more familiarity with a range of openings because it felt like I kept getting surprised with stuff I only sort of knew about but certainly had no plan for (When did everyone start playing the Vienna Game and the Caro-Kann, for example?). On one hand, playing 30-minute games gave me a chance to think through what to do carefully and weigh my own candidate moves against my opponent's with some deliberation. On the other hand, the speed of Bullet and Blitz offered something Rapid couldn't: Volume! In the time I set aside for one long Rapid game, I could play 10-15 faster games, which meant 10-15 chances to see new stuff and try out my responses. Bullet and Blitz started to feel like maybe they could be a prototyping lab where I could get lots of exposure to different things and maybe learn more quickly through sheer mass. Besides the fact that I was starting to get used to playing quickly (and enjoying the potential to squeeze a quick game in when I had no time for something longer), I was also starting to wonder if the advice about playing slowly to learn was wrong, or at least belied a complexity to the question of how to learn to play.

There's another issue to consider, too: Does learning transfer between time controls? This is a question that comes up all the time in a range of different learning experiments in cognitive science - sure, you can train someone to perform a particular task more quickly, more accurately, or however you want to measure performance. That part isn't always that interesting or surprising - what's more interesting is finding out that getting better at one thing tends to also make you better at something else, a phenomenon that we call "transfer." In a way, this is fundamental to a lot of different kinds of training you probably have some familiarity with, like a strength-training plan at the gym or a physical therapy regimen designed to help someone recover after an injury. We don't engage in these activities just so we can get better at the bench press specifically, or at walking down the one ramp that might be installed at a therapy center. What we're hoping is that doing the bench press a lot will make us better able to do a range of tasks that depend on our muscles, or that practicing with that ramp after an injury will help us deal with staircases or slippery terrain later. In both cases, we have some intuition that this should work because we understand that there is a broader ability that each training exercise targets and that applies to other things: The bench press? It helps build something called strength. That ramp at the therapy center? It helps improve balance. Because we have a sense that this broad ability exists, we feel confident in the potential for transfer between one kind of training and a different kind of test. A fundamental question we often encounter in cognitive science, however, is how to group different kinds of abilities together under broad categories like this and how to know when it makes sense to do so. To put it another way, are we sure that Bullet Chess is actually the same thing as Rapid Chess?

Naively, it sure seems like they are. They use the same pieces, have the same rules, and only differ in terms of how much time you have to do the same activity. That said, I think anyone who has played both very fast and very slow time controls has a real sense that they don't necessarily feel like the same game. My approach to Bullet and Blitz sometimes has an element of desperate aggression that my Rapid play hardly ever does: If I start seeing my Bullet position disintegrate, I might just start bullying my opponent with wild attacks in the hopes that it destabilizes them enough that I can either flag them or push them into blundering. In Rapid, I'm much more likely to look at the same position and search for ways to turn a weaker position (being down an exchange, for example) into an asymmetry that I can try to make work for me (closing down the center to make knights more nimble than rooks). If you poke around enough chess forums, you can find a lot of threads expanding on these differences, often with bold claims that Bullet and Blitz aren't "real" chess, or at the very least that they're simply different games played with the same pieces.

A small sample of reasoned debate on the topic.

The idea that fast and slow cognition fundamentally differ from one another has a good pedigree. One of the most notable and eloquent advocates for this position has been Daniel Kahneman, who introduced and popularized a dual-process take on decision-making that referred to "System 1" vs. "System 2" thinking. System 1 is fast, relies on simple rules of thumb (heuristics and biases), and may not involve much conscious thought. System 2 is slow and takes conscious effort, by comparison, and as a result may involve circumventing some of those quick-and-dirty rules that System 1 relies on in a reflexive manner. When people talk about "pattern recognition" in chess, or situations where they just sort of feel like something might be the right move, that's more in alignment with System 1 decision-making. When they talk about calculating and recalling long lines or previous games with similar positions, that's much more compatible with System 2. This all starts to sound like a Bullet vs. Rapid kind of divide, right? If it turns out to be, there may be important consequences for learning to play chess at different time controls: Training Bullet performance (System 1) might not contribute much to Rapid abilities (System 2), or vice-versa! To be sure, it's not like these two ways of making a decision are completely independent, especially because anything that takes a long time includes sufficient room for something fast to happen. Still, it could be that they are sufficiently different that training one doesn't sharpen the mechanisms you need for the other - maybe you can calculate consequences of some move wonderfully if you have a few minutes to do it, but haven't committed enough patterns to memory so that you have that quick reflexive sense that this is the move to make. Or maybe you have some decent intuitions about how to respond with a good move based on pattern recognition, but don't have the visualization skills to find the great move your opponent can see.

How do we find something like this out? What we want to know is something like whether or not there is a common ability like strength or balance that any time control can help improve (we could call that ability - I dunno, chess) or if there are different abilities for each time control that have to be trained on their own (fast chess and slow chess). One way we could look at this is to do an experiment - take some chess novices, randomly assign each of them to train at one time control for some amount of time, and then see how they do across the different formats afterwards. This would be cool, but also has some fiddly issues to consider: Should we match the number of games played, the amount of time played, or something else? It's also just tough to pull off, so I want to take a different approach here, one that we can use without having to do any recruitment or testing at all. We're going to use a data analytic technique called *factor analysis* to see what we can find out about the mechanisms that support chess playing.

The key idea in factor analysis is to look at multiple measurements (or variables) for each of a large number of samples and examine how those measurements co-vary to identify a new way to explain the data using combinations of our original variables. That's a lot to unpack, so let me try and walk you through what our goal is with an example. Imagine that we encountered some kind of alien organism that we'd never seen before and we set about measuring lots of stuff about each little being that we found. Based on the kind of stuff we're used to measuring for terrestrial organisms, let's say that we start by measuring the height, the width, the weight, the color, and the temperature of each alien being. That gives us 5 numbers per being and these are our original variables. The idea behind factor analysis is to examine the relationships or correlations between all of these variables - when one of the numbers changes, what tends to happen to the others? You might not be surprised, for example, to find out that a taller being tends to also be a heavier being - that's one pairwise correlation between two of our variables that's pretty straightforward to understand, and what we want to do is think about all of those possible pairwise correlations between variables to learn how each variable contributes relative to the others to making each data point different than the others (the variability in the data).
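To make "all of those possible pairwise correlations" a little more concrete, here is a minimal sketch in Python. The measurements are numbers I made up for six hypothetical beings, and the `pearson` and `correlation_matrix` helpers are just illustrative names, not part of any particular statistics package.

```python
from math import sqrt

# Made-up measurements for six hypothetical alien beings (illustration only).
aliens = {
    "height":      [10, 12, 15, 18, 20, 25],
    "width":       [4, 5, 6, 7, 8, 10],
    "weight":      [30, 35, 47, 55, 61, 74],
    "color":       [7, 2, 9, 4, 8, 3],
    "temperature": [70, 22, 88, 41, 79, 33],
}

def pearson(xs, ys):
    """Pearson correlation: shared variation scaled by each variable's own spread."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def correlation_matrix(table):
    """Correlate every variable with every other variable (and with itself)."""
    names = list(table)
    return {a: {b: pearson(table[a], table[b]) for b in names} for a in names}

corr = correlation_matrix(aliens)

# Print one row per variable so the pattern of strong and weak pairs is visible.
for a in aliens:
    print(f"{a:>12}", " ".join(f"{corr[a][b]:+.2f}" for b in aliens))
```

Values near +1 or -1 mean two measurements rise and fall together, while values near 0 mean that knowing one tells you little about the other. That grid of numbers is the raw material a factor analysis works from.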

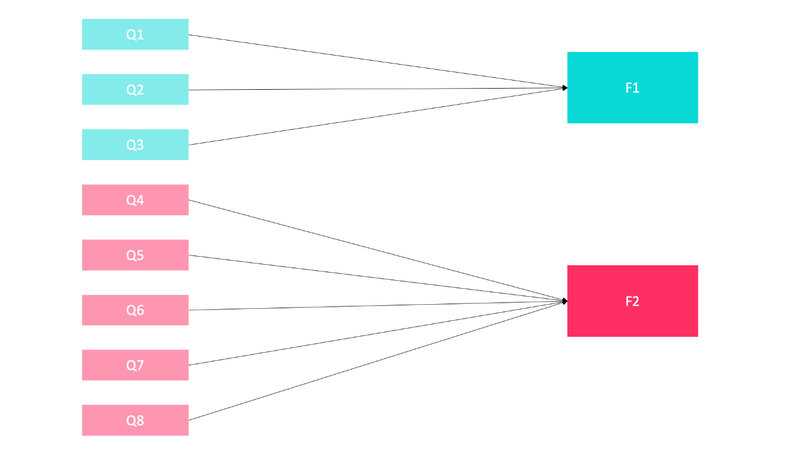

Now, here's where we get to the interesting part of factor analysis: Imagine that after we look at our 5 numbers, we find out something interesting about the correlations between our variables across the sample of beings. Let's imagine that height, width, and weight are actually perfectly correlated with one another - knowing one number means you can exactly predict either of the others. Likewise, let's imagine that color and temperature have the same relationship - perfect correlation so that knowing one of the numbers lets you work out exactly what the other one is for every being in our sample. Finally, let's imagine that color/temperature have nothing to do with height/width/weight values - you can't predict anything about the color from knowing what the height is, for example. What do those patterns of co-variation mean in terms of factor analysis? A simple way to think about it is to say that the perfect correlations between height, width, and weight mean that we don't need to bother measuring all three things about our beings: Any one of 'em would tell you about the others, so why bother collecting all three? Instead, we can get away with thinking about those variables in terms of a common factor that we might call size - three numbers that we can collapse into just one. Likewise, the perfect relationship between color and temperature means that we can think about these in terms of some other common factor we might call warmth. The fact that size has nothing to do with warmth in terms of covariation/correlation means that we can't do much more to condense this data set into a smaller number of measurements - our data has two factors or latent variables in it, and these offer a new, simpler language for describing our data and also help us think about a better way to talk about what makes these little critters different from one another. They vary in size and warmth, so we may as well talk about them in terms of those things instead of our original set of variables. The image below is a schematic view of what we're doing - grouping our original measurements into a more compact description that helps us understand what aspects of the data belong together and which ones don't.

Ahn T. Dang, https://towardsdatascience.com/exploratory-factor-analysis-in-r-e31b0015f224
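The idealized alien example can be mimicked in a few lines of Python. This is a toy stand-in and not a real factor analysis: it only works because I built the made-up data so that every correlation is exactly 1 or exactly 0, and the `group_by_correlation` helper and its threshold are my own illustrative choices. Real data is noisy, which is why proper factor analysis estimates loadings instead of sorting variables into tidy bins.

```python
from math import sqrt

# Two hidden factors for four hypothetical beings, chosen so that they are
# exactly uncorrelated with each other (made-up values, illustration only).
size   = [-1.0,  1.0, -1.0, 1.0]
warmth = [-1.0, -1.0,  1.0, 1.0]

# Each measurement is an exact linear function of one hidden factor,
# which is what produces the perfect correlations in the thought experiment.
measurements = {
    "height":      [100 + 20 * s for s in size],
    "width":       [40 + 5 * s for s in size],
    "weight":      [60 + 15 * s for s in size],
    "color":       [5 + 2 * w for w in warmth],
    "temperature": [30 + 8 * w for w in warmth],
}

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def group_by_correlation(table, threshold=0.99):
    """Place a variable in an existing group if it is (near-)perfectly
    correlated with that group's first member; otherwise start a new group."""
    groups = []
    for name, values in table.items():
        for group in groups:
            if abs(pearson(values, table[group[0]])) >= threshold:
                group.append(name)
                break
        else:
            groups.append([name])
    return groups

factors = group_by_correlation(measurements)
print(factors)
```

Five measured variables collapse into two groups, which are the "size" and "warmth" factors from the example. The measured numbers never mention those hidden quantities directly; the grouping falls out of the correlations alone.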

Alright, back to chess - the idea then is to do something similar for measurements of chess performance across different time controls. If we have data for how good players are at Bullet, Blitz, Rapid, etc., what patterns of covariation do we observe in that data and how many factors do we end up with? Is there just one big factor that explains all of the variation across different players, or can we group performance at different kinds of chess into some different set of latent measurements? There are a lot of data analytic questions that I'm eliding here that matter a lot: There are many decisions to make that I'm not going to bother covering here that involve different techniques for choosing the best number of factors, the way we measure error between the model we're trying to fit to the data and the data itself, and a bunch of other things. In the interests of taking a first pass at this question, I'm just going to crank through a factor analysis quickly and talk to you about what we see.

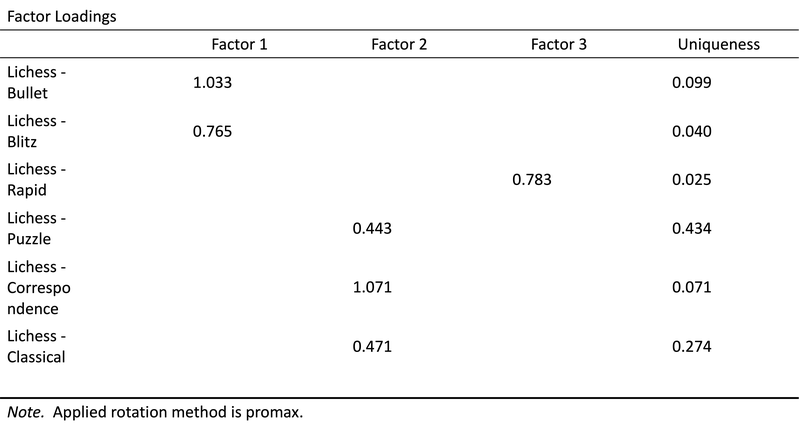

I went to https://www.chessratingcomparison.com/, which is maintained by David Reke (daviddoeschess), and includes a downloadable database of ratings data from thousands of chess players. In particular, it includes Bullet, Blitz, Rapid, Classical, Correspondence, and Puzzle ratings for players here on chess.com and lichess.org. I ended up using the lichess data for this analysis because it had fewer missing datapoints, which helps increase our sample size. I loaded all of the data into JASP, which is a free application for statistical analysis and used its Factor Analysis tools to ask how many factors were best for explaining the variance in the data. The table below shows off the results of this analysis, including the 3 factors, or latent variables, that the analysis recovered and the loadings of each original variable onto those factors.
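Since missing datapoints shrink the usable sample, here is a rough Python sketch of what keeping only complete rows looks like. The column names and the four sample rows are made up for illustration; the real download may be laid out differently, and this is not a description of what JASP does internally.

```python
import csv
import io

# Hypothetical column names; the real file's headers may differ.
RATING_COLUMNS = ["bullet", "blitz", "rapid", "classical", "correspondence", "puzzle"]

# Made-up rows standing in for the downloaded ratings file.
SAMPLE_CSV = """username,bullet,blitz,rapid,classical,correspondence,puzzle
player_a,1500,1550,1700,1750,1800,1900
player_b,1200,,1400,1450,,1600
player_c,2000,1980,2050,2100,2000,2200
player_d,900,950,,1100,1150,1300
"""

def complete_cases(csv_text, columns):
    """Return one list of floats per player who has a value in every column."""
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        # Treat absent or blank cells as missing.
        values = [(record.get(col) or "").strip() for col in columns]
        if all(values):
            rows.append([float(v) for v in values])
    return rows

ratings = complete_cases(SAMPLE_CSV, RATING_COLUMNS)
print(f"{len(ratings)} of 4 players have all six ratings")
```

Every player with even one blank rating drops out of this kind of filter, so a data set with fewer gaps leaves more players to work with.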

Factor Analysis loadings for Lichess ratings

The first thing to say about this is that the 3 factors we ended up with represent a reduction in the number of variables from what we started with (6!). That means that there are meaningful patterns of covariation between our measurements. What are those patterns and what do they mean? Like our hypothetical alien beings, we can look at the loadings and think about what they mean in terms of some new descriptions of the data. Our first factor loads Bullet and Blitz performance onto the same latent variable, which is a way of saying that knowing one number tells you a decent bit about the other, but not about other aspects of ability. I'm going to suggest that we might call this factor something like reflex-chess ability. Our second factor loads Classical, Correspondence, and Puzzle performance onto the same variable, which maybe looks a bit like a deliberative-chess ability. Finally, the third factor has Rapid performance all by its lonesome, suggesting a sort of intermediate range of play - which we might call blended-chess. To be clear, I'm making up the names here - these are just my readings of what those groupings might mean. What's neat is seeing across hundreds and thousands of players that the data maybe suggest that there are something like 2 to 3 unique kinds of chess ability rather than just one.

The implications of this at a surface level are that it's easy to predict chess abilities across different time controls within these factors, but not as easy across them. Knowing how someone is at Bullet can help predict how they'll be at Blitz, but neither will help you make as good a guess about their performance at either Rapid games or Puzzles. At a deeper level, I think this suggests that it's probably worth thinking about what players are doing differently in these time controls in terms of unique cognitive mechanisms: When playing Bullet, what processes are relied upon that aren't used so much in Classical play? What are the tools you use during Puzzle solving that you don't bring to bear during Rapid or Blitz? To my mind, these are neat questions both to think about training strategies and also for understanding how we use our minds differently as a function of how quickly the chess clock runs down. So how many kinds of chess ability are there? I don't want you to think that the answer is exactly 3 based on this quick-and-dirty bit of data analytics, but I also think that even this cursory look at the data suggests that the answer also probably isn't just one! I'm excited to think about this more and dig into some more of the literature about how different cognitive mechanisms contribute to playing the game in different ways, so stay tuned.

Support Science of Chess posts!

Thanks for reading! If you're enjoying these Science of Chess posts and would like to send a small donation ($1-$5) my way, you can visit my Ko-fi page here: https://ko-fi.com/bjbalas - Never expected, but always appreciated!
