github.com/piazzai

Do Variants Help You Play Better Chess? Statistical Evidence

You spent hours racing kings, nuking knights, and fending off pawn hordes. Cool! But will any of this help you win a game of standard chess?

A frequently asked question among Lichess users is whether spending time on variants makes you better at standard chess and, if so, which variants help most to improve the quality of standard play. The question crops up so often in the Lichess forum that, as one user remarked, it outs whoever asks it as someone new to the community. Yet the community keeps finding it engaging, because people are always eager to share their views on particular variants and discuss their consequences for standard games.

Curious about the question myself, I decided to scour the forum for possible answers. I found an astonishing variety of opinions from titled players, amateurs, variant aficionados, skeptics, converts, standard loyalists, and everything in between. I was disappointed, however, that no one on either side of the discussion provided actual evidence in support of these opinions. The discourse on the benefits of variants for standard chess is just a whirlwind of subjective impressions.

More interested in facts, I decided to change my approach. I downloaded the records of over 103 million games from the Lichess database and used statistics to find out whether playing variants makes you more or less likely to win a standard game. Below is a report of what I found. The data say that variants do indeed affect your standard chess performance, but nearly everyone on the forum has it wrong!

Is training for a mile going to hurt your two-mile?

Any Lichess user must have wondered at some point if Antichess, Crazyhouse, King of the Hill, and the other variants available on the website could be useful to improve at standard chess. Trying to get some clarity on this issue myself, I thought the Lichess forum would be an obvious place to look. A quick search returned a lot of interesting discussions, but I was especially intrigued by one titled "Playing Variants Makes You Weaker," which includes comments by high-rated variant players. These people spend days on end in variant games, so they should know better than anyone how playing variants affects one's standard chess performance. Here is a sample of what some of them think:

There's probably some merit to improving at standard by playing any variant, as each one focuses on some aspect of standard just in a different form. For instance, crazyhouse could help with dynamic positions (or just middlegames in general), KOTH and antichess could help with taking space advantages, three check could help with defending, etc. However, I don't think these help as much as just playing standard, learning openings, practicing endgames, or puzzles. (Source)

Variants improve your game sense in a lot of ways, and most games are super transferrable with chess. As I am a competitive runner, I like to compare this to track events. Is training for a mile going to hurt your two mile? Or your 5km? It won't hurt it, but there will come a time where it'll stop helping. (Source)

Variants are VERY helpful. They shift your thought process quite a bit and make you relearn some stuff from a different perspective. Even though I am far from an expert in those variants, crazyhouse and three-check have both taught me quite good things simply because I get out of my comfort zone when playing them. (Source)

High-rated players of standard chess might not spend nearly as much time on variants, but they also find the question intriguing. GM Levon Aronian is often quoted as saying that Chess960 is "healthy and good for your chess," and that practicing it seriously "really improves your chess vision." His opinion is fairly widespread in the chess world, and FIDE's patronage of the variant's World Championship, attended by the likes of Carlsen, So, Nepomniachtchi, and Caruana, lends it further credibility. It was dutifully echoed in the forum by FM Jens Hirneise, who also commented on other variants:

If anything might help your Standard chess, then Chess960. Three check doesn't help you in normal games because all those manoeuvring only exists because of the Three check goal. Also arguing about Horde helping learning to understand pawn structures is no comparison to comparing pawn structures in standard games. (Source)

Wait, wasn't every variant supposed to help in its own way? I thought Three-Check could teach me how to spot pieces converging on my king, but Hirneise seems unconvinced. While the benefits of Chess960 are practically taken for granted, those of other variants are very much in doubt. An anonymous FM also expressed skepticism:

I would go as far as to say that variants that strongly differ from standard chess like Horde and RK do not affect a player's chess skills at all because it is easy to distinguish these variants from standard chess. A problem that I have come across when playing variants like Atomic or Three-Check is that standard games will be affected by forgetting that capturing pieces do not disappear or by starting really unsound attacks. That problem vanishes after a couple of days of not playing variants though. (Source)

This is starting to get complicated. Players who are experts in variants say that Three-Check helps with defending, while experts in standard say it makes you start unsound attacks. In the end, does it do more harm than good? How does it compare to Chess960 in terms of benefits for standard play? And are the more freaky variants, like Racing Kings and Horde, truly inconsequential? People like to make strong claims about these issues, but there is no expert consensus. And among more casual players, the lack of consensus turns into confusion, as the sheer number of users means that every possible opinion makes its way onto the table. Here are two radically opposite takes:

Three check improves manoeuvring your pieces and attacking the king. Crazyhouse improves attention and concentration, and ability to spot strengths and weaknesses. Chess960 involves ability to deal with unfamiliar positions. Horde improves learning about pawn structure, the strengths and weaknesses of pawns such as isolated (weak), passed (strong) or doubled (sometimes strong, sometimes weak). All the rest except antichess don't have any effect at all. Antichess has a negative effect! (Source)

In my opinion, no matter what the variant is (except for chess960) it will be bad for improving your game. A couple of months ago I started playing three-check chess quite often which resulted in weeks of bad form. People may disagree but I do not play variants anymore. (Source)

It could be that casual players lack the self-awareness needed to notice how spending time on variants affects their standard chess performance. But to be fair, expert players do not provide a consistent account either. Some say that variants help, others that they are useless or counterproductive, and in any case no one provides a shred of evidence beyond their own anecdotal experience. As a middling player with an interest in variants, I wanted to sift through community opinions to arrive at some verifiable truth, but I could only find presumptions, hunches, and impressions. Therefore, I turned away from the forum and put my question to the Lichess database.

Letting data speak: A statistical model of chess wins

If it is hard to get an answer from members of the Lichess community, perhaps it is easier to extract one from the millions of games they play on Lichess every day. In fact, searching for trends in the data would be worthwhile even if people's opinions weren't so inconsistent, because words are cheap and game statistics speak much louder. If spending time on a particular variant influences how well people perform in standard chess, then there should be a positive or negative relationship between the number of games recently played in that variant and the probability of winning a standard game.

To test if such relationships exist, I downloaded the PGN files of 103.84 million rated games played in January 2022 across all variants, namely Antichess, Atomic, Chess960, Crazyhouse, Horde, King of the Hill, Racing Kings, Three-Check, and standard chess. I identified all users who played at least one standard game between January 10 and 30. Then, for each user, I randomly selected one standard game played within this three-week period that satisfied the following conditions:

- The game ended by draw, resignation, or checkmate. This excludes games that ended because one of the players left the board, violated the rules, or ran out of time. A large number of games end because of time, but the information I want lies primarily in games that end by good or bad moves.

- The game was not a correspondence game, as the long duration of such games makes it difficult to assess if recently played variants had any impact on the result.

- The game did not involve bots. I want my results to be applicable to humans playing other humans. Perhaps games involving bots are not inherently dissimilar, but bots will not be reading this blog post anyway and do not care about my question.

- The difference between the two players' ratings was not extreme, where extreme means in the outer 5% of the distribution. It is important for my analysis that players are evenly matched: a large gap between their ratings, as in the case of a titled player taking challenges from amateurs, makes the game unrepresentative.

- The change in either player's rating after the game was not extreme, where again extreme means in the outer 5% of the distribution. This is because big swings indicate that a rating is provisional and the players may not be evenly matched.
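The selection procedure above can be sketched in Python. This is a minimal illustration under stated assumptions, not the author's code: it assumes each game is a dictionary of PGN header tags as they appear in the Lichess database exports (`UTCDate`, `Event`, `Termination`, `WhiteTitle`, `WhiteElo`, `WhiteRatingDiff`, and so on), treats `Termination == "Normal"` as "draw, resignation, or checkmate", and reads "outer 5%" as the top 5% of absolute rating gaps and absolute rating changes. All function names are hypothetical.

```python
import math
import random
from collections import defaultdict


def quantile(values, q):
    """Nearest-rank quantile: the smallest value with at least a share q of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered)))
    return ordered[rank - 1]


def passes_basic_filters(h, first_day, last_day):
    """Conditions that can be checked on a single game's PGN headers (assumed tag names)."""
    return (
        first_day <= h.get("UTCDate", "") <= last_day       # "YYYY.MM.DD" sorts correctly as text
        and h.get("Variant", "Standard") == "Standard"      # standard chess only
        and h.get("Termination") == "Normal"                # assumed to mean draw, resignation, or mate
        and "Correspondence" not in h.get("Event", "")      # no correspondence games
        and "BOT" not in (h.get("WhiteTitle"), h.get("BlackTitle"))  # humans only
    )


def rating_gap(h):
    return abs(int(h["WhiteElo"]) - int(h["BlackElo"]))


def rating_swing(h):
    # Rating changes carry a sign, e.g. "+6" or "-6"; int() accepts both forms.
    return max(abs(int(h["WhiteRatingDiff"])), abs(int(h["BlackRatingDiff"])))


def sample_one_game_per_user(games, first_day="2022.01.10", last_day="2022.01.30", seed=0):
    """Return {username: (headers, side)} with one random eligible standard game per user."""
    eligible = [h for h in games if passes_basic_filters(h, first_day, last_day)]
    if not eligible:
        return {}
    # Assumption: "outer 5%" means the top 5% of absolute gaps and of absolute swings.
    gap_cut = quantile([rating_gap(h) for h in eligible], 0.95)
    swing_cut = quantile([rating_swing(h) for h in eligible], 0.95)

    candidates = defaultdict(list)
    for h in eligible:
        if rating_gap(h) > gap_cut or rating_swing(h) > swing_cut:
            continue
        # Each eligible game is a candidate for both of its players.
        for side in ("White", "Black"):
            candidates[h[side]].append((h, side))

    rng = random.Random(seed)  # fixed seed for a reproducible draw
    return {user: rng.choice(options) for user, options in candidates.items()}
```

Drawing a single game per user, rather than keeping all of them, is what stops very active players from dominating the sample.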

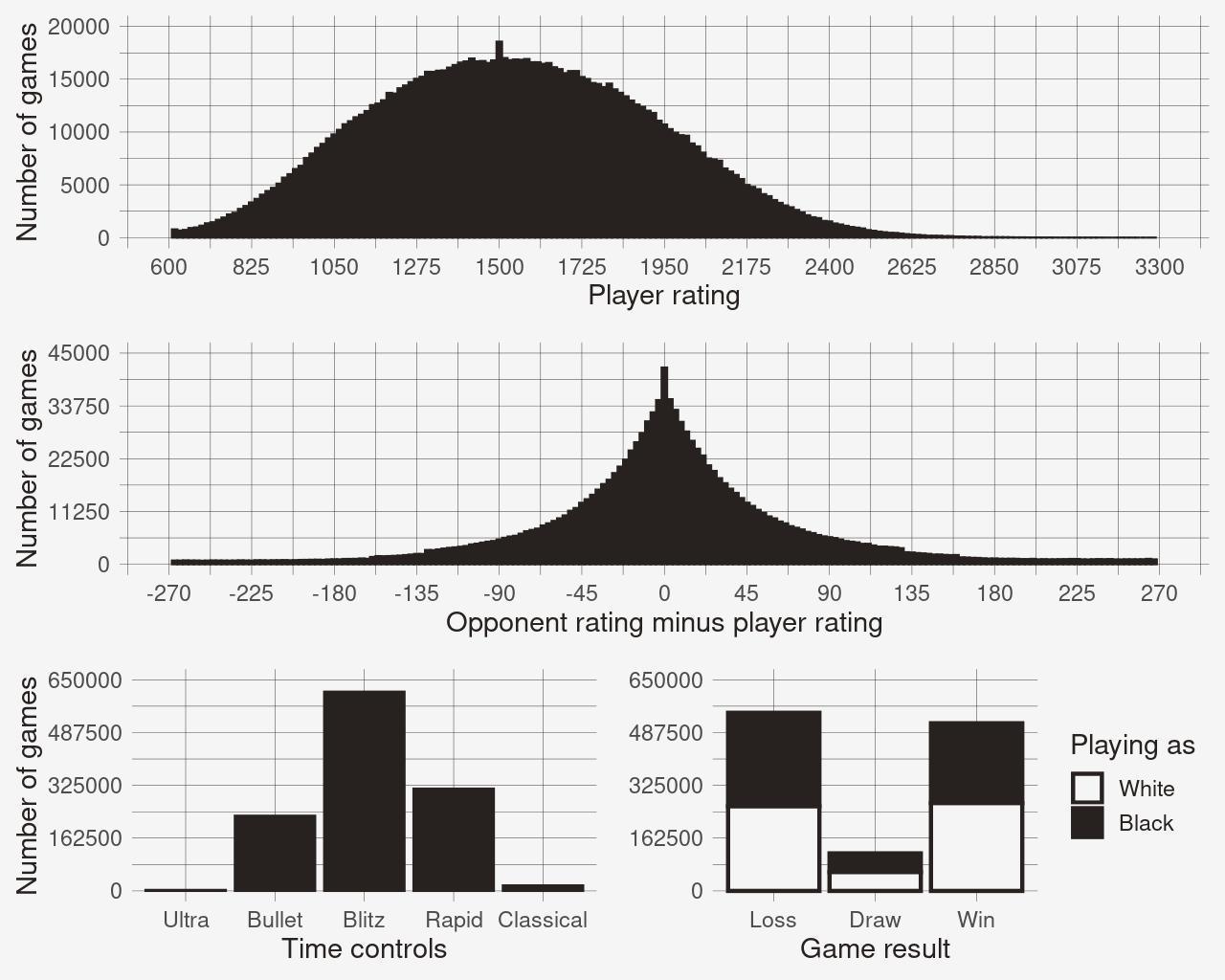

I ended up with a sample of 1,180,193 games viewed from the perspective of one of the two players, either black or white. I recorded the outcome of the game as a win, loss, or draw for that player. I also took note of the player's standard rating, the opponent's standard rating, and the time controls. Because I only selected one random game per user, more active players do not bias the sample. Here is a summary of the data:

Distribution of ratings, rating differentials, time controls, and outcomes in the sampled games

I used logistic regression to predict the odds that a game was won by the focal user, as opposed to being drawn or lost. Odds are a probabilistic construction that can be a bit tricky to interpret, but they are closely related to probabilities. They are not quite the same thing: for example, odds of 1:2 mean that an event is expected to occur once in every three trials. This is not the same as a probability of 1/2, or 50%, which means that an event is expected half the time. Odds and probabilities are linked in the sense that when one goes up or down, the other does the same. The logistic model deals primarily in odds, so we will reason in odds rather than probabilities.
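The conversion between odds and probabilities is a one-liner in each direction. The sketch below uses hypothetical function names; `Fraction` keeps the arithmetic exact, so the 1:2 example comes out as exactly one in three.

```python
from fractions import Fraction


def odds_from_probability(p):
    """Odds in favour of an event with probability p: p / (1 - p)."""
    return p / (1 - p)


def probability_from_odds(odds):
    """Inverse transformation: odds / (1 + odds)."""
    return odds / (1 + odds)


# Odds of 1:2 correspond to one occurrence in every three trials.
print(probability_from_odds(Fraction(1, 2)))   # 1/3
# A probability of 1/2 corresponds to odds of 1:1.
print(odds_from_probability(Fraction(1, 2)))   # 1
```

Both functions are strictly increasing, which is why odds and probabilities always move in the same direction.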

As predictors of the odds of victory, I considered the player's standard rating, because higher-rated players are more likely to win; the opponent's rating minus the player's, because stronger opponents make it harder to win; the side played, because white has an advantage; and a series of variables that capture the player's recent game experience. These include the total number of games played in the past 168 hours, i.e., one week, and the share of games played in each variant during this one-week window. To account for players' familiarity with different time controls, I also considered the share of games played during this week at faster or slower controls.
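Written out, a model with these predictors is a standard logistic regression. The following is a schematic version under stated assumptions, with symbols introduced here for illustration: R is the player's rating, R_opp the opponent's, W equals 1 when playing white, N is the number of games played in the past 168 hours, S_v is the share of those games in variant v (standard is the omitted reference category), and S_faster and S_slower are the shares played at faster and slower time controls. The post does not specify transformations such as taking the logarithm of N, so the exact functional form is an assumption.

```latex
% Schematic specification; functional form of each term is assumed.
\[
\log\frac{P(\text{win})}{1 - P(\text{win})}
  = \beta_0 + \beta_1 R + \beta_2\,(R_{\text{opp}} - R) + \beta_3 W + \beta_4 N
  + \sum_{v \in \mathcal{V}} \gamma_v S_v
  + \delta_1 S_{\text{faster}} + \delta_2 S_{\text{slower}}
\]
```

Exponentiating a coefficient gives an odds ratio: e raised to the power of gamma_v is the multiplier on the odds of winning for a one-unit increase in S_v, holding the other predictors fixed.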

My assumption is that, within the sampled games, players redeployed experience gained during the previous week. If time spent on variants had any effect, then the shares of variant games they played during this week should be predictive of their odds of victory. Of course, players might have actually redeployed experience gained during a lifetime of chess and not just the previous week, but as long as what they played during this week was consistent with their general habits, this makes no difference to the model.

Note that it is irrelevant whether the games played during this one-week window were won, drawn, or lost. This is an important feature of my model, because I am trying to understand if simply playing variants, and not necessarily being good at them, relates to standard chess performance. It is also irrelevant whether the games ended by time forfeit or any other means, whether they were played against bots, whether they were played against much stronger or weaker opponents, whether ratings were provisional, and whether they were correspondence games.
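Computing the experience predictors for one sampled game might look like the sketch below. Everything here is hypothetical: the `Game` record, the variant labels, and the speed classification, which assumes Lichess's speed categories based on estimated duration (initial time plus 40 times the increment, in seconds) to decide whether a game was faster or slower than the focal one. As described above, the window counts every game regardless of result, termination, opponent, or rating status.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical variant labels, spelled as in the post; standard is the remainder.
VARIANTS = ("Antichess", "Atomic", "Chess960", "Crazyhouse", "Horde",
            "King of the Hill", "Racing Kings", "Three-Check")

# Assumed upper bounds (seconds) on base + 40 * increment for
# UltraBullet, Bullet, Blitz, and Rapid; anything longer is Classical.
SPEED_LIMITS = (29, 179, 479, 1499)


@dataclass
class Game:
    start: datetime
    variant: str                  # "Standard" or one of VARIANTS
    base_seconds: Optional[int]   # None for correspondence (no clock)
    increment_seconds: int = 0


def speed_rank(game):
    """0 = UltraBullet ... 4 = Classical, 5 = correspondence."""
    if game.base_seconds is None:
        return len(SPEED_LIMITS) + 1
    duration = game.base_seconds + 40 * game.increment_seconds
    for rank, limit in enumerate(SPEED_LIMITS):
        if duration <= limit:
            return rank
    return len(SPEED_LIMITS)


def recent_experience(focal, history, window=timedelta(hours=168)):
    """Experience predictors from every game in the week before the focal game.

    Results, terminations, opponents, and ratings are deliberately ignored.
    """
    recent = [g for g in history if focal.start - window <= g.start < focal.start]
    n = len(recent)
    counts = Counter(g.variant for g in recent)
    focal_rank = speed_rank(focal)

    def share(count):
        return count / n if n else 0.0

    return {
        "games_past_week": n,
        "variant_share": {v: share(counts[v]) for v in VARIANTS},
        "share_faster": share(sum(speed_rank(g) < focal_rank for g in recent)),
        "share_slower": share(sum(speed_rank(g) > focal_rank for g in recent)),
    }
```

Because the variant shares and the standard remainder sum to one, the standard share is left out of the model and acts as the baseline.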

Playing variants makes you weaker

The logistic regression model returns estimates in the form of odds ratios. These are multipliers on the odds of winning that apply for each one-unit increase in the value of a predictor variable. For example, an estimate of 1.15 for Atomic would suggest that a player's odds of winning standard games are 15% greater when the share of Atomic games played during the previous week increases by one unit. It does not matter what the player's odds actually are (high because the player is skilled and playing white, low because the player is up against a much stronger opponent, or anything else): they would always be 15% greater for a one-unit increase in the predictor. Conversely, an estimate of 0.85 would suggest that the player's odds of winning are 15% smaller for the same increase. An estimate of 1.00 would mean there is no change.
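Because an odds ratio multiplies the odds rather than the probability, the same estimate moves the probability of winning by different amounts depending on where a player starts. A small sketch using the hypothetical 1.15 from the example above (the function name is illustrative):

```python
def apply_odds_ratio(p, odds_ratio):
    """Multiply the odds implied by probability p by odds_ratio; return the new probability."""
    odds = p / (1 - p)
    new_odds = odds * odds_ratio
    return new_odds / (1 + new_odds)


# The same hypothetical odds ratio of 1.15 at three baseline win probabilities.
for baseline in (0.2, 0.5, 0.8):
    print(f"{baseline:.2f} -> {apply_odds_ratio(baseline, 1.15):.3f}")
# 0.20 -> 0.223
# 0.50 -> 0.535
# 0.80 -> 0.821
```

In every case the odds grow by exactly 15%, even though the probability gains differ.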

Odds ratios above or below 1.00 do not necessarily mean that a change occurs, however, because every estimate is uncertain and comes with a 95% confidence interval. The width of the interval represents the uncertainty around the estimate. If the value 1.00 lies within this interval, then the change in odds is statistically indistinguishable from no change at all, and there is too much uncertainty to claim that the predictor has any effect.
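In a logistic regression, each odds ratio is the exponential of a fitted coefficient b, and a common way to obtain its 95% interval is the Wald interval exp(b ± 1.96 × SE). The post does not state which interval method was used, so this is an assumption, and the coefficients and standard errors below are made up purely for illustration:

```python
import math
from statistics import NormalDist


def odds_ratio_with_ci(coefficient, std_error, level=0.95):
    """Exponentiate a logit coefficient and its Wald interval, exp(b +/- z * SE)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% interval
    estimate = math.exp(coefficient)
    lower = math.exp(coefficient - z * std_error)
    upper = math.exp(coefficient + z * std_error)
    significant = not (lower <= 1.0 <= upper)   # 1.00 lies outside the interval
    return estimate, lower, upper, significant


# Hypothetical coefficients: similar point estimates, very different uncertainty.
print(odds_ratio_with_ci(-0.20, 0.15))   # interval straddles 1.00: not significant
print(odds_ratio_with_ci(-0.45, 0.05))   # interval entirely below 1.00: significant
```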

The full results of the model, including important diagnostics for possible estimation problems, are available on GitHub. These results are consistent with what one would expect: the odds of winning increase with the player's rating, decrease with the opponent's rating, and increase when playing white. They also increase when playing more games in total over the past week, and when more of these games are played with the same time controls as the standard game under analysis.

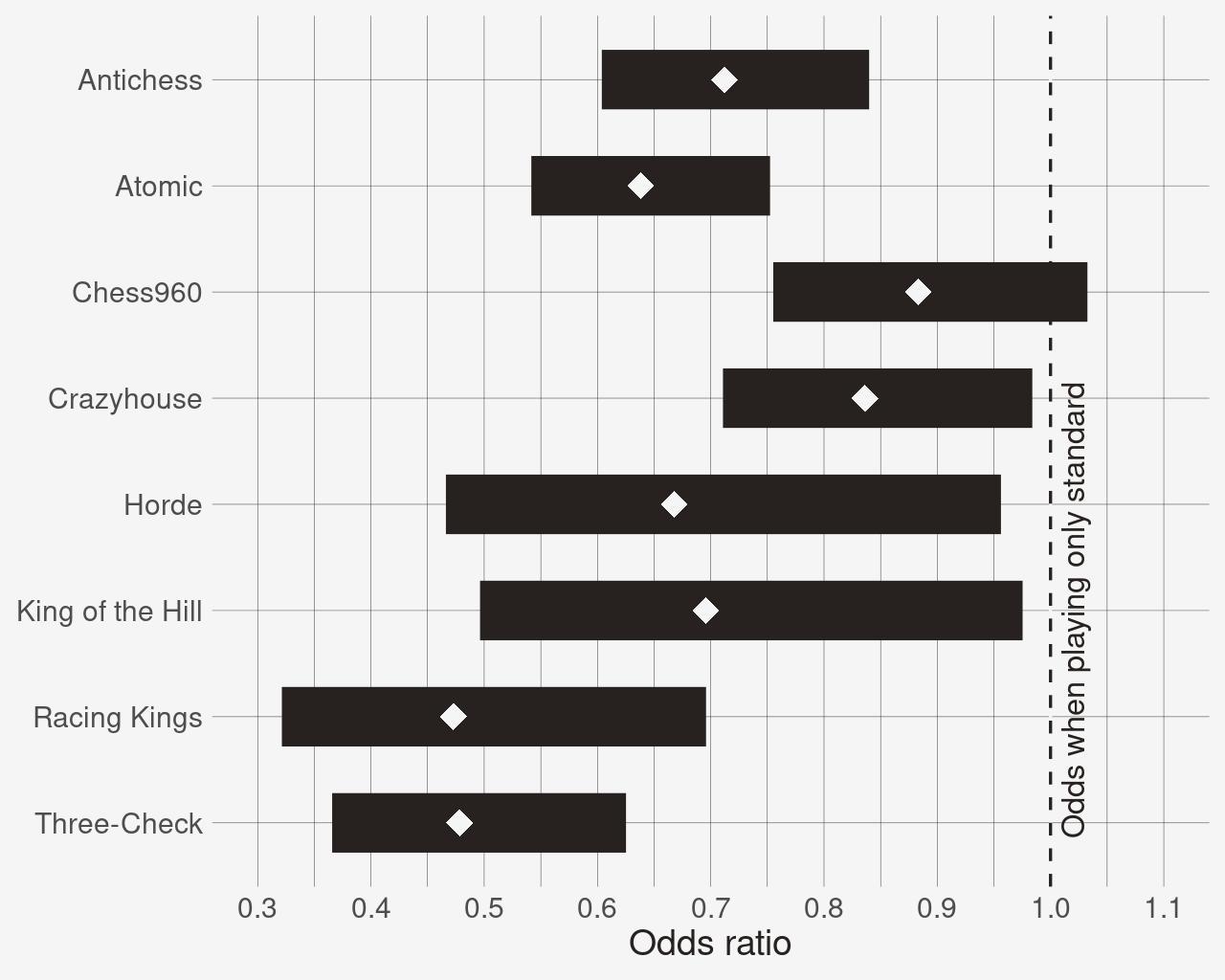

Below are the odds ratios estimated for predictors that represent time spent on variants. The white diamonds correspond to the estimates. The black boxes around diamonds correspond to their confidence intervals. The dashed vertical line represents the value at which time spent on variants is indistinguishable from time spent on standard. If a variant's confidence interval extends over this line, then there is not enough evidence that the variant affects the odds of victory one way or another.

Estimates of the logistic model, with 95% confidence intervals

Let us pause to consider this picture for a moment, as it contains the answer to my titular question. With the exception of Chess960, all variants are associated with an odds ratio lower than 1.00 and a confidence interval that does not cross the line. Therefore, except for Chess960, all of them decrease the odds of winning a standard game. I shall repeat it: apart from Chess960, all variants make you worse at standard chess.

To get a sense of these effects, recall that these predictors are shares of the games played in a particular variant during the past week. They range from zero to one, where zero means that 0% of games were in that variant and one means that 100% of them were. Every other variant has a share of its own and standard is left as the remainder, so any game not counted toward a variant is treated as standard. Because odds ratios are linked to one-unit increases in a predictor, we are effectively comparing the odds of someone who played only the variant to those of someone who played only standard.

With this in mind, Three-Check and Racing Kings have by far the most detrimental effects. They decrease the odds of winning a standard game by 52.2% and 52.7% respectively. Antichess, Atomic, King of the Hill, and Horde have smaller but still important negative effects, decreasing the odds by 28.8%, 36.2%, 30.4%, and 33.2% respectively. There is considerably more uncertainty about King of the Hill and Horde than about Antichess and Atomic, but not enough to question that their negative effects are real. Crazyhouse has a comparatively mild effect, decreasing the odds by "only" 16.4%.

For Chess960, the model estimates a decrease in odds of 11.7%, but this estimate is not statistically significant: its confidence interval crosses 1.00, the value that signifies no difference from the odds of someone who played only standard chess. The implication is that, for the purpose of improving your chances in a standard game, Chess960 appears just as good as standard chess.

Although these numbers describe the situation where 100% of a player's games were in a variant, the same results hold for any mix of standard and variant games. For example, if someone played 25% of games in Atomic and 75% in standard, the decrease in odds of victory would shrink from 36.2% to 10.6%. This value can be computed by raising the odds ratio of Atomic, 0.638, to the power of 0.25, which brings it closer to the 1.00 threshold. Because the confidence interval also shrinks in proportion, the estimate retains exactly the same statistical significance.
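The arithmetic for mixed schedules follows from the log-odds being linear in the share: an odds ratio OR estimated for a full share becomes OR raised to the power of the share for a partial one. The sketch below derives each odds ratio as 1 minus the percentage decrease reported above and applies a 25% share. The standard error in the last lines is hypothetical and only illustrates why the significance does not change.

```python
import math

# Odds ratios implied by the reported decreases (odds ratio = 1 - decrease),
# for the variants whose intervals exclude 1.00.
REPORTED_DECREASE = {
    "Three-Check": 0.522, "Racing Kings": 0.527, "Antichess": 0.288,
    "Atomic": 0.362, "King of the Hill": 0.304, "Horde": 0.332, "Crazyhouse": 0.164,
}


def odds_ratio_for_share(full_odds_ratio, share):
    """Odds ratio for a share in [0, 1]; the log-odds effect scales linearly with the share."""
    return full_odds_ratio ** share


def z_statistic(odds_ratio, std_error_of_log):
    """Wald z-statistic of an odds ratio, computed on the log scale."""
    return math.log(odds_ratio) / std_error_of_log


for variant, decrease in REPORTED_DECREASE.items():
    mixed = odds_ratio_for_share(1 - decrease, 0.25)
    print(f"{variant:<17} 100%: -{decrease:.1%}   25%: -{1 - mixed:.1%}")

# Scaling the share by s multiplies both log(OR) and its standard error by s,
# so the z-statistic is unchanged. The standard error of 0.05 is hypothetical.
print(z_statistic(0.638, 0.05), z_statistic(0.638 ** 0.25, 0.25 * 0.05))
```

For Atomic, this reproduces the figure in the text: 0.638 to the power of 0.25 is about 0.894, a decrease of about 10.6%.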

Conclusion

And so I reached the end of my small data-driven journey. I set out to understand whether spending time on variants makes one better at playing standard chess, and I wasn't disappointed. The answer returned by the Lichess database is fairly comprehensive, albeit different from what most would expect.

Based on the results of my analysis, nearly every opinion on the forum is misleading for one reason or another. Time spent on a variant never makes you better at standard chess than the same time spent on standard chess itself. In fact, most variants tend to make you worse. If I were a bettor and could wager on one of two players, Alice, who played only standard chess during the past few days, or Bob, who mixed standard chess and variants, and these players were otherwise perfectly equal, I would not hesitate to put my money on Alice.

The only exception would be if Bob had been playing strictly Chess960 in addition to standard. In this case, I would just flip a coin. This makes Chess960 at least better than the other variants, but hardly "healthy and good for your chess."

That said, variants do not seem equally bad in terms of impact on your standard chess performance. There are three clusters: Racing Kings and Three-Check, which have the most severe effects; Antichess, Atomic, King of the Hill, and Horde, which have moderate effects; and Crazyhouse, which has a relatively small effect. Perhaps this is because Crazyhouse, like Chess960, is not too far from standard in its rules and goals. Three-Check also has rules similar to those of standard, but it changes the goals. Given that this variant is often believed to train one's ability to spot possible checks, it is quite remarkable that its effect is just as bad as that of Racing Kings, a variant with no checks at all.

Naturally, every analysis has its limitations. This is why we should never consider a question settled until many independent studies point to the same conclusions. Perhaps the most important limitation in this case is that I only looked at variant games played within a one-week window. It could be that longer exposure to a variant leads to different effects. As the reigning Lichess Racing Kings champion said in the forum:

Just "playing" variants is not how it works - you shouldn't expect anything with just a couple dozen games. The positive effect that everyone is talking about only occurs when you take a variant seriously, and study all aspects of the game for a longer time. Many of the people commenting here are experienced variant players, who have spent thousands of games and dozens to hundreds of hours studying their lost games and their variant in general. They've learnt what it means to better understand a chess game, and have been able to translate that experience to help them improve in standard chess too. (Source)

Although my analysis is far from perfect, I hope that it contributes some objective facts to chess players' understanding of the merits of variants. Such facts are, in my opinion, sorely missing from the current discourse. None of these results takes anything away from the fact that variants are fun and should be played for their own sake.

If you have any questions or feedback, please do not hesitate to get in touch!